Manage Integrations

When using AutoMQ Cloud, you can use its integration features to streamline data exchange between the instances in your environment and external systems.

This article describes how to use the integration features.

Throughout this article, references to the AutoMQ product provider, AutoMQ service provider, or AutoMQ refer specifically to "AutoMQ CO."

Integration Types

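| Integration Type | Data Type | Applicable Scenarios |
|---|---|---|
| Prometheus | Metrics | Send instance Metrics data to a Prometheus service you provide, for monitoring |
| Apache Kafka | Messages and other data | Synchronize or migrate data between an external Kafka cluster and AutoMQ in real time |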

Setting up Prometheus Integration

Prerequisites

The Prometheus integration in AutoMQ Cloud works by having each data node in the cluster push Metrics data directly to the Prometheus HTTP interface over the OpenTelemetry Protocol (OTLP). Consequently, the Prometheus service you provide must support OTLP ingestion.

Integrating with a Self-hosted Prometheus Service

According to the Prometheus release notes, OTLP ingestion requires your Prometheus service to meet the following criteria:

- The Prometheus version is 2.47 or later.
- The `--enable-feature=otlp-write-receiver` flag is included in the startup command when the service is deployed.

Configuration example:

Start the Prometheus service: `./prometheus --config.file=xxxx.yml --enable-feature=otlp-write-receiver`
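Before creating the integration, you can confirm both prerequisites against a running server. The sketch below is one way to do it, assuming your server exposes the standard Prometheus HTTP API endpoints `/api/v1/status/buildinfo` (which reports the version) and `/api/v1/status/flags` (which reports command-line flags), and assuming all `--enable-feature` values are reported as one comma-separated string. The function names are illustrative and are not part of AutoMQ or Prometheus.

```python
import json
import urllib.request

# Minimum version and feature flag stated in this article for OTLP ingestion.
MIN_VERSION = (2, 47)
REQUIRED_FEATURE = "otlp-write-receiver"


def parse_version(version: str) -> tuple[int, int]:
    """Extract (major, minor) from strings such as '2.47.0' or 'v2.51.2'."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def otlp_prerequisite_problems(buildinfo: dict, flags: dict) -> list[str]:
    """Return a list of problems; an empty list means both prerequisites hold."""
    problems = []
    version = buildinfo["data"]["version"]
    if parse_version(version) < MIN_VERSION:
        problems.append(f"Prometheus {version} is older than 2.47")
    # Assumption: the flags endpoint reports every --enable-feature value comma-separated.
    enabled = {f.strip() for f in flags["data"].get("enable-feature", "").split(",")}
    if REQUIRED_FEATURE not in enabled:
        problems.append(f"--enable-feature={REQUIRED_FEATURE} is not set")
    return problems


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """GET a Prometheus HTTP API endpoint and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def check_server(base_url: str) -> list[str]:
    """Fetch build info and flags from a live server, then run the checks."""
    base = base_url.rstrip("/")
    return otlp_prerequisite_problems(
        fetch_json(f"{base}/api/v1/status/buildinfo"),
        fetch_json(f"{base}/api/v1/status/flags"),
    )
```

For example, `check_server("http://${your_ip}:9090")` returns an empty list when the server meets both requirements; otherwise each entry names a prerequisite to fix. The checks are kept separate from the HTTP calls so they can be reused on JSON you fetched another way.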

When using a self-hosted Prometheus service, set the OTLP endpoint to:

`http://${your_ip}:9090/api/v1/otlp/v1/metrics`
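Because the endpoint has a fixed shape, it can be assembled from the server's address. This is a minimal helper, assuming Prometheus listens on its default port 9090 over plain HTTP as in the URL above; `prometheus_otlp_endpoint` is an illustrative name and `10.0.0.12` is a placeholder address.

```python
OTLP_METRICS_PATH = "/api/v1/otlp/v1/metrics"  # path given in this article


def prometheus_otlp_endpoint(host: str, port: int = 9090, scheme: str = "http") -> str:
    """Build the OTLP write URL of a self-hosted Prometheus server."""
    # IPv6 literals must be wrapped in brackets inside a URL.
    if ":" in host and not host.startswith("["):
        host = f"[{host}]"
    return f"{scheme}://{host}:{port}{OTLP_METRICS_PATH}"


print(prometheus_otlp_endpoint("10.0.0.12"))
# → http://10.0.0.12:9090/api/v1/otlp/v1/metrics
```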

If your self-hosted service is based on the commercial edition of VictoriaMetrics, make sure its version is 1.92.0 or later, and configure the endpoint according to the VictoriaMetrics documentation on configuring access.

Integrating with a Cloud Provider's Prometheus Service

If you are using a managed Prometheus service from a public cloud provider, we recommend consulting the provider's technical support. For example, Alibaba Cloud's Prometheus service supports OTLP out of the box; see its documentation on reporting data over OTLP.

Operational Steps

To create a Prometheus integration, follow these steps:

- Go to the Integrations page and create a new integration. In the environment console, click Integrations in the left navigation bar to open the Integrations page. Click Create Integration and enter the following information when prompted to complete the creation.

| Parameter | Description |
|---|---|
| Integration Name | A distinctive alias for the integration configuration. For naming rules, see Restrictions. |
| Integration Type | Select Prometheus Service. |
| Prometheus OpenTelemetry Write Interface | The OTLP write endpoint of your Prometheus service. AutoMQ Cloud instances use the OpenTelemetry protocol to write Metrics data directly to this Prometheus cluster. |
| Username | The username, if ACL authentication is enabled on the Prometheus service. |
| Password | The password, if ACL authentication is enabled on the Prometheus service. |

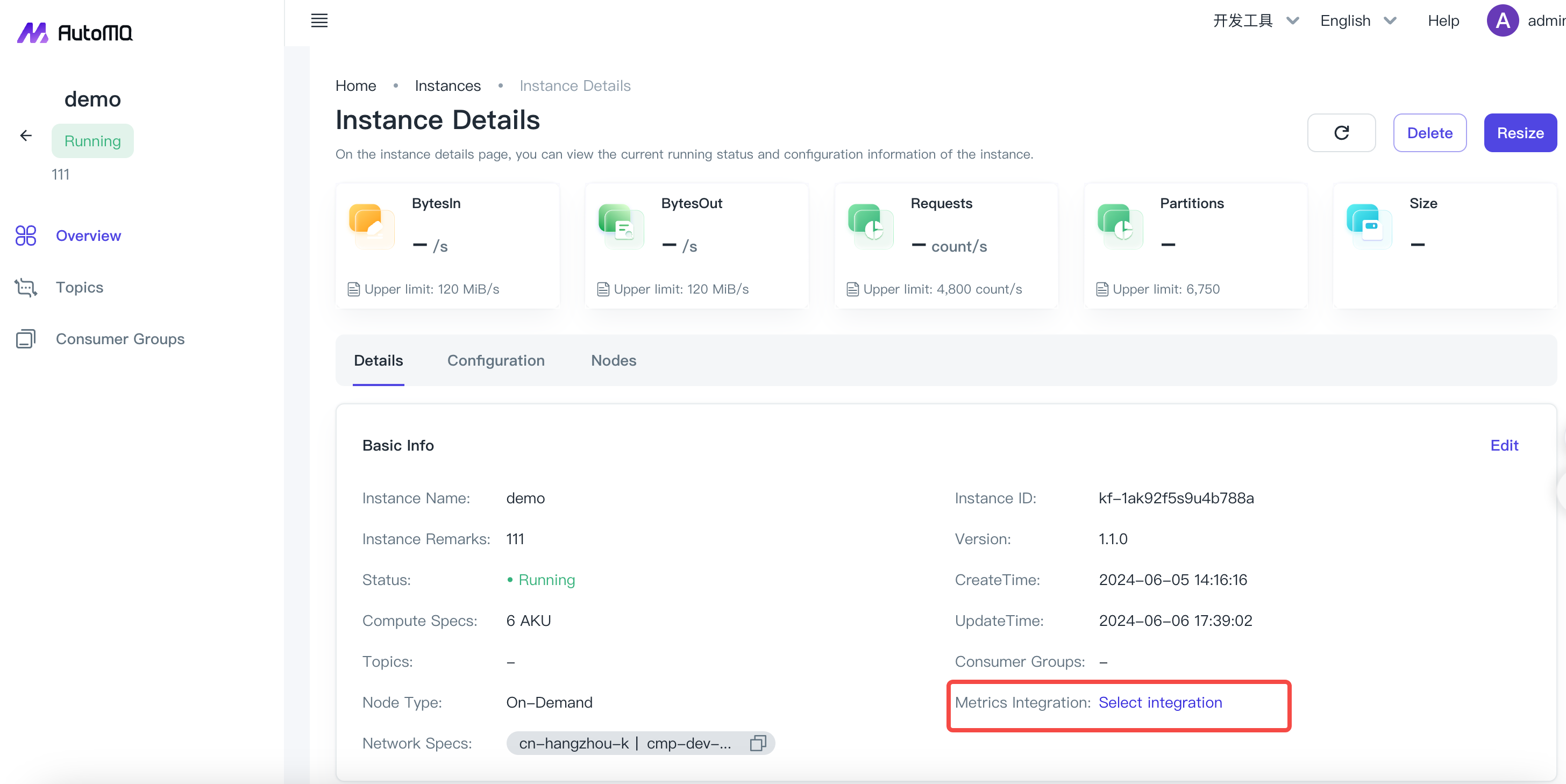

- Go to the instance details page and reference the integration. For each instance that should report metrics, open its details page, associate the integration created in the first step, and enable the configuration. Then check whether data is being reported to the Prometheus service.
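The form fields from the first step can be sanity-checked before you submit them. This article does not describe a programmatic interface for the form, so the class below is a hypothetical local checklist rather than an AutoMQ API: `PrometheusIntegration` and its rules are assumptions, in particular that Username and Password are supplied together because both apply only when ACL authentication is enabled. The naming rules in Restrictions are not encoded.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class PrometheusIntegration:
    """Hypothetical local mirror of the Create Integration form (not an AutoMQ API)."""

    name: str           # Integration Name
    otlp_endpoint: str  # Prometheus OpenTelemetry Write Interface
    username: str = ""  # only needed when ACL authentication is enabled
    password: str = ""  # only needed when ACL authentication is enabled

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the form looks complete."""
        problems = []
        if not self.name.strip():
            problems.append("Integration Name is empty")
        url = urlparse(self.otlp_endpoint)
        if url.scheme not in ("http", "https") or not url.netloc:
            problems.append("write interface must be an http(s) URL with a host")
        # Assumption: ACL credentials are entered as a pair.
        if bool(self.username) != bool(self.password):
            problems.append("set both Username and Password, or neither")
        return problems
```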

For the definitions of the Metrics data reported by AutoMQ Cloud, see Monitoring AutoMQ for Kafka.
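To confirm that data is arriving, you can run an instant query against the Prometheus HTTP API endpoint `/api/v1/query`. This is a sketch under stated assumptions: the metric name is a placeholder to replace with one listed in Monitoring AutoMQ for Kafka, the helper names are illustrative, and the query endpoint is assumed to need no authentication. Prometheus may rewrite OTLP metric names on ingestion (for example, replacing dots with underscores), so check the exact name in Prometheus first.

```python
import json
import urllib.parse
import urllib.request


def query_prometheus(base_url: str, promql: str, timeout: float = 10.0) -> dict:
    """Run an instant PromQL query via GET /api/v1/query and return the JSON body."""
    params = urllib.parse.urlencode({"query": promql})
    url = f"{base_url.rstrip('/')}/api/v1/query?{params}"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def has_series(response: dict) -> bool:
    """True when the query succeeded and matched at least one time series."""
    if response.get("status") != "success":
        return False
    return bool(response.get("data", {}).get("result"))


# Hypothetical placeholder: substitute a metric listed in Monitoring AutoMQ for Kafka.
METRIC_NAME = "replace_with_an_automq_metric"
PROMQL = f"count({METRIC_NAME})"
```

For example, `has_series(query_prometheus("http://${your_ip}:9090", PROMQL))` returns `True` once at least one series for that metric exists. `count()` over a missing metric yields an empty result, which `has_series` reports as `False`.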

Setting up Apache Kafka® Integration

Prerequisites

The Apache Kafka integration in AutoMQ Cloud connects to external Kafka clusters (including other Kafka-compatible distributions) through the Kafka Connector component, enabling real-time synchronization and migration of messages and other data. Ensure the following:

- The external Apache Kafka cluster is version 0.9.x or later.
- The external Kafka cluster has reliable network connectivity to the AutoMQ network.
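Both prerequisites can be checked from a host in the same network as AutoMQ before you create the integration. The sketch below assumes you know the external cluster's version and its bootstrap server list in the usual `host:port,host:port` form; the function names are illustrative. A successful TCP connection only shows that the port is open, not that Kafka authentication or advertised listeners are configured correctly.

```python
import socket


def kafka_version_supported(version: str) -> bool:
    """True for Kafka 0.9.x or later, e.g. '0.10.2.1' or '3.7.0'."""
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) >= (0, 9)


def parse_bootstrap_servers(servers: str) -> list[tuple[str, int]]:
    """Split 'host1:9092,host2:9092' (IPv6 as '[::1]:9092') into (host, port) pairs."""
    pairs = []
    for entry in filter(None, (e.strip() for e in servers.split(","))):
        host, sep, port = entry.rpartition(":")
        if not sep or not host:
            raise ValueError(f"expected host:port, got {entry!r}")
        pairs.append((host.strip("[]"), int(port)))
    return pairs


def unreachable_brokers(servers: str, timeout: float = 3.0) -> list[str]:
    """Return the bootstrap entries that refuse or time out a TCP connection."""
    failed = []
    for host, port in parse_bootstrap_servers(servers):
        try:
            with socket.create_connection((host, port), timeout=timeout):
                pass  # connection opened; close it immediately
        except OSError:
            failed.append(f"{host}:{port}")
    return failed
```

An empty list from `unreachable_brokers` means every bootstrap address accepted a connection within the timeout.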

Operational Steps

To set up an Apache Kafka integration, proceed as follows:

- Go to the Integrations page and create a new integration. In the environment console, click Integrations in the left navigation bar to open the integration list. Click Create Integration and follow the on-screen prompts to enter the information below to complete the creation.

| Parameter | Description |
|---|---|
| Integration Name | A distinctive alias for the integration configuration. For naming rules, see Restrictions. |
| Integration Type | Select Apache Kafka Service. |
| Access Protocol | The client access protocol for connecting to external Kafka clusters. Currently supported: |