Partition Reassignment in Seconds

Partitions are the core resource model in Apache Kafka, serving as endpoints for client read and write traffic. As the number of partitions in a cluster increases, issues like uneven partition distribution and hotspot partitions may arise. Therefore, performing partition reassignment is a routine and frequent operational task in managing Apache Kafka.

However, in the Shared Nothing architecture of Apache Kafka, partition reassignment relies on replicating a large amount of data. For instance, a Kafka partition with 100 MiB/s of traffic generates about 8.2 TiB of data per day. If this partition needs to be reassigned to another broker, the entire dataset must be replicated. Even with nodes that have 1GBps bandwidth, the reassignment would take hours to complete, leaving Apache Kafka clusters almost incapable of real-time elasticity.
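The arithmetic behind these figures can be checked with a short sketch. It assumes a full day of retained data has to be copied and that the link is fully dedicated to the copy. Because "1GBps" can be read either as one gigabyte per second or one gigabit per second, both readings are computed. The function names are illustrative only.

```python
MIB = 1024 ** 2
TIB = 1024 ** 4
SECONDS_PER_DAY = 86_400


def daily_volume_bytes(rate_mib_per_s: float) -> float:
    """Bytes written in one day at a constant ingest rate given in MiB/s."""
    return rate_mib_per_s * MIB * SECONDS_PER_DAY


def copy_time_hours(volume_bytes: float, link_bytes_per_s: float) -> float:
    """Hours needed to copy the volume over a link that is fully dedicated to it."""
    return volume_bytes / link_bytes_per_s / 3600


volume = daily_volume_bytes(100)
hours_at_gigabyte = copy_time_hours(volume, 1e9)      # reading "1GBps" as 1 gigabyte/s
hours_at_gigabit = copy_time_hours(volume, 1e9 / 8)   # reading "1GBps" as 1 gigabit/s

print(f"daily volume: {volume / TIB:.2f} TiB")
print(f"copy time at 1 GB/s: {hours_at_gigabyte:.1f} h")
print(f"copy time at 1 Gbit/s: {hours_at_gigabit:.1f} h")
```

Under either reading the copy takes hours, and in practice replication shares the link with live traffic, so the real duration would likely be longer.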

Thanks to AutoMQ's shared storage architecture, only a small amount of data needs to be synchronized during actual partition reassignment, making it possible to reduce the reassignment time to seconds.

How AutoMQ Achieves Second-Level Partition Reassignment

In AutoMQ's shared storage architecture, almost all data is stored in object storage, and only a small amount resides temporarily in WAL (Write-Ahead Log) storage. During partition reassignment, only the data that is still in the WAL and has not yet been uploaded to object storage needs to be forcibly uploaded. Once this is done, the partition can be safely transferred to another node, typically in about 1.5 seconds.
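As a rough illustration of why flushing the WAL is fast, the sketch below estimates the upload time for a few pending-data sizes. The pending sizes and the 1 GiB/s upload rate are assumptions chosen for illustration, not measured AutoMQ figures, and `flush_seconds` is a hypothetical helper.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def flush_seconds(pending_bytes: float, upload_bytes_per_s: float) -> float:
    """Time to upload the data still sitting in the WAL at a constant rate."""
    return pending_bytes / upload_bytes_per_s


# Assumption: sustained upload rate to object storage during the flush.
ASSUMED_UPLOAD_RATE = 1 * GIB

for pending_mib in (100, 300, 500):
    seconds = flush_seconds(pending_mib * MIB, ASSUMED_UPLOAD_RATE)
    print(f"{pending_mib} MiB pending -> {seconds:.2f} s")
```

The point is the order of magnitude: the amount to move is bounded by what is left in the WAL, not by the partition's total size.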

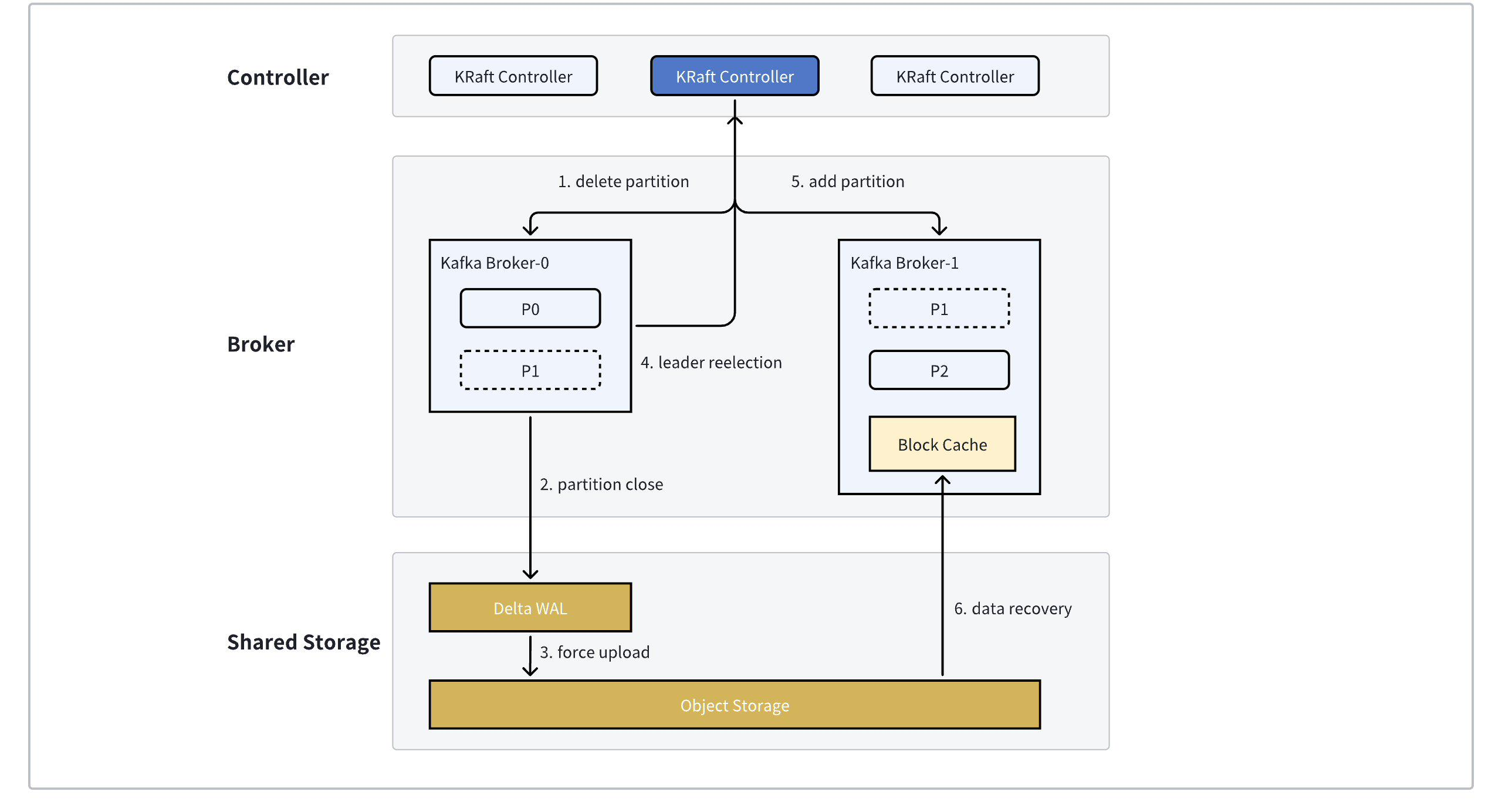

The reassignment process is shown in the diagram above. Taking the reassignment of partition P1 from Broker-0 to Broker-1 as an example:

When the KRaft Controller receives the partition reassignment command, it constructs the corresponding PartitionChangeRecord and commits it to the KRaft Log layer. Broker-0 is removed from the Leader Replica list, and Broker-1 is added to the Follower Replica list. Broker-0, upon syncing the KRaft Log and detecting the change in partition P1, enters the partition shutdown process.

During partition shutdown, if partition P1 still has data that has not been uploaded to object storage, a forced upload is triggered. In a stable running cluster, this data typically amounts to a few hundred MB. Given the burst network bandwidth offered by current cloud providers, the upload generally takes only seconds. Once the data for P1 is uploaded, the partition can be safely shut down and removed from Broker-0.

After Broker-0 completes the partition shutdown, it proactively triggers a leader election. At this point, Broker-1, as the only Replica, is promoted to Leader of P1 and enters the partition recovery process.

During partition recovery, the metadata for P1 is pulled from object storage to restore P1's Checkpoint. Data recovery is then performed based on P1's shutdown status (that is, whether it was shut down cleanly).

At this point, the partition reassignment is complete.
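The sequence above can be modeled as a toy, in-memory simulation. This is a minimal sketch of the described flow, not AutoMQ's implementation: the `ObjectStore`, `Broker`, and `reassign` names, the checkpoint layout, and the use of plain lists for records are all hypothetical simplifications, and only the clean-shutdown path is modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ObjectStore:
    """Stand-in for object storage: uploaded records and checkpoints per partition."""
    records: Dict[str, List[str]] = field(default_factory=dict)
    checkpoints: Dict[str, dict] = field(default_factory=dict)


@dataclass
class Broker:
    broker_id: int
    wal: Dict[str, List[str]] = field(default_factory=dict)  # records not yet uploaded
    open_partitions: set = field(default_factory=set)

    def close_partition(self, partition: str, store: ObjectStore) -> int:
        """Shutdown: force-upload the WAL remainder, write a checkpoint, release the partition."""
        pending = self.wal.pop(partition, [])
        store.records.setdefault(partition, []).extend(pending)
        store.checkpoints[partition] = {
            "end_offset": len(store.records[partition]),
            "clean_shutdown": True,
        }
        self.open_partitions.discard(partition)
        return len(pending)

    def open_partition(self, partition: str, store: ObjectStore) -> int:
        """Recovery: restore the checkpoint from object storage and start serving."""
        checkpoint = store.checkpoints[partition]
        if not checkpoint["clean_shutdown"]:
            raise NotImplementedError("unclean-shutdown recovery is not modeled here")
        self.open_partitions.add(partition)
        return checkpoint["end_offset"]


def reassign(partition: str, source: Broker, target: Broker,
             store: ObjectStore, controller_log: List[str]) -> int:
    """Controller records the change, source shuts down, target recovers as leader."""
    controller_log.append(
        f"PartitionChangeRecord({partition}: {source.broker_id} -> {target.broker_id})"
    )
    uploaded = source.close_partition(partition, store)
    controller_log.append(f"broker {source.broker_id} uploaded {uploaded} pending records")
    end_offset = target.open_partition(partition, store)
    controller_log.append(f"broker {target.broker_id} is leader at offset {end_offset}")
    return end_offset


store = ObjectStore(records={"P1": ["r0", "r1", "r2"]})
broker0 = Broker(0, wal={"P1": ["r3", "r4"]}, open_partitions={"P1"})
broker1 = Broker(1)
controller_log: List[str] = []

end_offset = reassign("P1", broker0, broker1, store, controller_log)
print("\n".join(controller_log))
```

Note that the only data moved during the handover is the two records left in Broker-0's WAL. The three records already in object storage are never copied between brokers.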

Significance of Second-Level Partition Reassignment

In a production environment, a Kafka cluster typically serves multiple business applications. The traffic fluctuations and partition distribution of these applications may lead to insufficient cluster capacity or machine hotspots. Kafka administrators need to expand the cluster and reassign hotspot partitions to idle nodes to ensure the cluster's service availability.

The time it takes to reassign partitions determines the efficiency of emergency response and operations:

The shorter the reassignment time, the shorter the time from cluster expansion to meeting capacity demands, and the shorter the service disruption time.

The faster the reassignment, the less time operations personnel spend monitoring, allowing for quicker operational feedback and subsequent actions.

In AutoMQ's architecture, second-level partition reassignment is the foundation for several automation capabilities, including automatic scale-out, scale-in, and continuous self-balancing of traffic.

Quick Start

Refer to "Example: Partition Reassignment in Seconds" to experience AutoMQ's second-level partition reassignment capabilities.
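For orientation, the sketch below builds a reassignment plan in the JSON format accepted by Apache Kafka's `kafka-reassign-partitions.sh` tool and prints the matching command. It assumes the stock Kafka tool can be pointed at an AutoMQ cluster on the grounds of Kafka compatibility; the linked example remains the authoritative procedure. The topic name, partition number, broker ID, file name, and bootstrap address are placeholders.

```python
import json


def build_reassignment_plan(topic: str, partition: int, target_broker: int) -> dict:
    """Plan that moves one partition to a single target broker."""
    return {
        "version": 1,
        "partitions": [
            {"topic": topic, "partition": partition, "replicas": [target_broker]}
        ],
    }


# Placeholder values: move partition 1 of "example-topic" to broker 1.
plan = build_reassignment_plan("example-topic", 1, 1)
plan_json = json.dumps(plan, indent=2)

# Command to run once plan_json has been saved as reassignment.json.
command = [
    "bin/kafka-reassign-partitions.sh",
    "--bootstrap-server", "localhost:9092",
    "--reassignment-json-file", "reassignment.json",
    "--execute",
]

print(plan_json)
print(" ".join(command))
```

The replica list contains a single broker because, as described earlier, the target broker is the partition's only Replica after reassignment.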