Save Cross-AZ Traffic Costs with AutoMQ

AutoMQ's S3-based shared storage architecture avoids both server-side cross-AZ replica replication and cross-AZ write traffic from Producers. This document explains how to configure AutoMQ to save on cross-AZ traffic costs.

In this document, AZ stands for Availability Zone, specifically referring to zones provided by cloud providers in a public cloud environment. Each independent AZ may be backed by independent data center facilities.

Problem Background

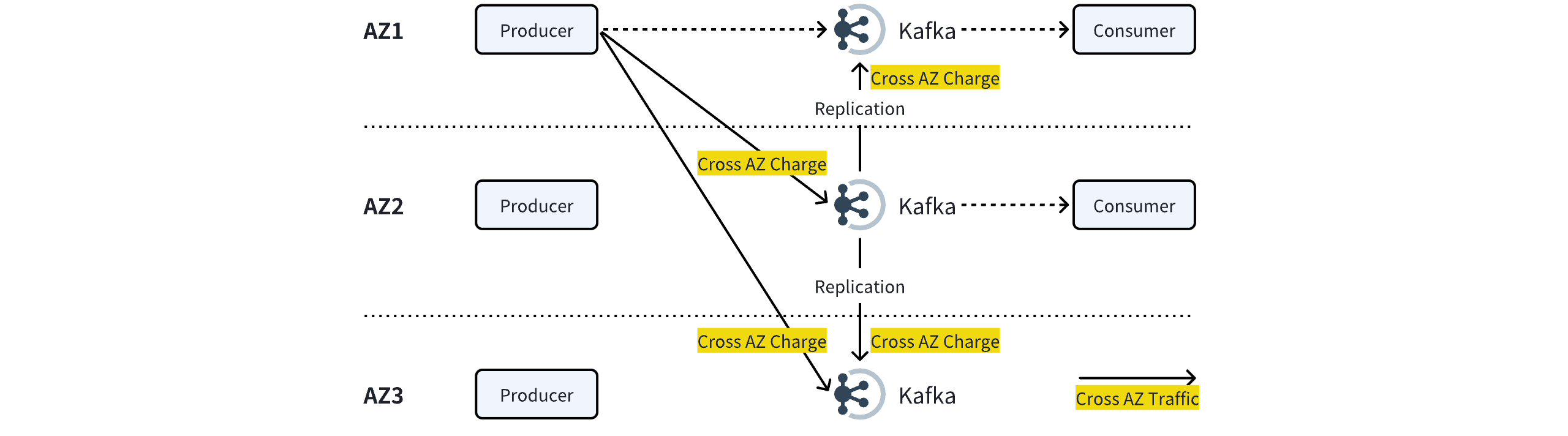

Apache Kafka Cross-AZ Traffic Analysis

When users deploy Apache Kafka across multiple AZs in AWS or GCP cloud environments, they gain multi-AZ disaster recovery but also incur cross-AZ data transmission costs. In large-scale clusters, these traffic costs can account for 60-70% of total costs.

Cross-AZ Production Traffic: Assuming the Producer does not set ShardingKey and partitions are evenly distributed across cluster nodes in three AZs, at least 2/3 of the Producer traffic will be sent across AZs. For example, a Producer in AZ1 sends 1/3 of its traffic to AZ2 and another 1/3 to AZ3.

Server-Side Cross-AZ Replication Traffic: After receiving a message, a Kafka Broker replicates the data to Brokers in other AZs to ensure high data durability. With three replicas placed in three AZs, this generates cross-AZ traffic equal to 2x the Produce traffic.

Cross-AZ Consumption Traffic: Consumers can set client.rack to consume partitions/replicas in the same AZ to avoid generating cross-AZ traffic.
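The arithmetic behind these three items can be checked with a small calculation. The following is a minimal sketch, not part of AutoMQ or Kafka: the class and method names are hypothetical, and it assumes partition leaders are spread evenly over the AZs and that every follower replica sits in a different AZ from its leader.

```java
/** Back-of-the-envelope estimate of cross-AZ traffic for an Apache Kafka cluster (hypothetical helper). */
final class CrossAzTraffic {

    /**
     * Producer traffic that leaves the producer's own AZ, assuming partition
     * leaders are spread evenly over azCount AZs.
     */
    static double produceCrossAz(double produceMBps, int azCount) {
        return produceMBps * (azCount - 1) / azCount;
    }

    /**
     * Replication traffic, assuming each of the (replicationFactor - 1)
     * followers is located in a different AZ from the leader.
     */
    static double replicationCrossAz(double produceMBps, int replicationFactor) {
        return produceMBps * (replicationFactor - 1);
    }

    /** Total cross-AZ traffic when consumers read from the same AZ via client.rack. */
    static double totalCrossAz(double produceMBps, int azCount, int replicationFactor) {
        return produceCrossAz(produceMBps, azCount)
                + replicationCrossAz(produceMBps, replicationFactor);
    }
}
```

Under these assumptions, an illustrative 300 MB/s of Produce traffic with three AZs and three replicas yields 200 MB/s of cross-AZ Producer traffic plus 600 MB/s of cross-AZ replication traffic.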

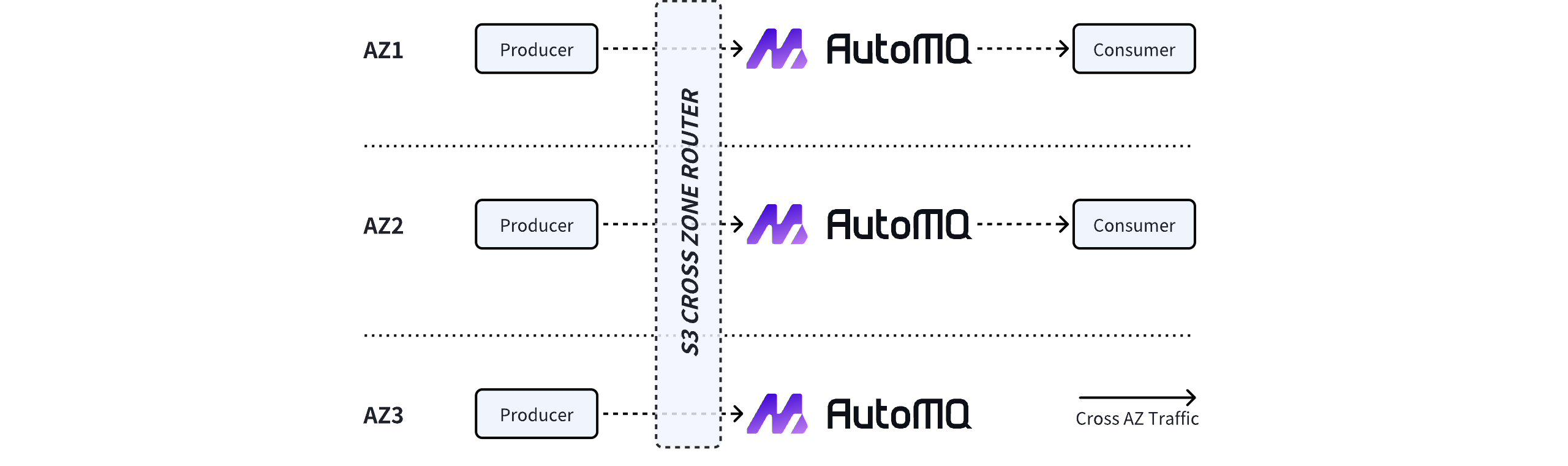

AutoMQ Multi-Point Write Architecture Saves Cross-AZ Transmission

AutoMQ leverages S3 to implement a storage-compute separation architecture. S3, acting as a shared storage layer, provides multi-AZ data durability, eliminating the need for cross-AZ replica replication. This saves the server-side replication traffic depicted in the Apache Kafka diagram above.

In addition, AutoMQ Brokers are stateless. By intercepting Kafka client requests and recognizing cross-AZ Produce requests, a Broker proxies those requests through S3 to achieve multi-point writes for Kafka partitions. A Broker in any AZ can serve PRODUCE requests for any partition, which eliminates cross-AZ traffic costs between the Producer and Broker.

Prerequisites

As analyzed above, in cloud environments such as AWS and GCP, cross-AZ transmission incurs traffic costs. Using AutoMQ can avoid the traffic costs associated with Kafka's production, consumption, and server-side replica replication.

To configure AutoMQ to save on cross-AZ traffic transmission, the following conditions must be met:

Cloud provider environment constraints: Currently, configuring AutoMQ multi-point writing is recommended only for multi-AZ deployments in AWS and GCP environments. If the application architecture is single-AZ, or if you use other cloud providers (where cross-AZ transmission is currently free), this configuration is not recommended.

Multi-AZ deployment constraints: To save on cross-AZ transmission traffic using AutoMQ multi-point write architecture, both the application and AutoMQ must support multi-AZ deployment.

AZ Quantity Constraints: Currently, only deployments in which the Producer, Consumer, and AutoMQ span three AZs are supported. If the Producer and Consumer span more than three AZs, there will still be some cross-AZ traffic.

AZ Capacity Balancing Constraints: Currently, only AZ-balanced deployment is supported, meaning the capacity of Producer and Consumer applications must be the same in each AZ. If the capacity is unbalanced, there will still be some cross-AZ traffic.
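The AZ quantity and capacity constraints above can be checked before rollout. The following is a minimal sketch under stated assumptions: the class and method are hypothetical (not an AutoMQ API), "capacity" is modeled as a plain instance count per AZ, and the AZ names are whatever identifiers your cloud provider uses.

```java
import java.util.Map;
import java.util.Set;

/** Hypothetical pre-flight check for the AZ constraints described above. */
final class AzPrerequisites {

    /**
     * Returns true when the clients run in exactly the same AZs as the
     * AutoMQ instance and have identical capacity in every AZ.
     *
     * @param clientCapacityByAz client instance count per AZ (assumed capacity measure)
     * @param instanceAzs        AZs selected for the AutoMQ instance
     */
    static boolean matchesInstance(Map<String, Integer> clientCapacityByAz,
                                   Set<String> instanceAzs) {
        if (clientCapacityByAz.isEmpty()) {
            return false;
        }
        // Clients in an AZ the instance does not cover (or vice versa) cause cross-AZ traffic.
        if (!clientCapacityByAz.keySet().equals(instanceAzs)) {
            return false;
        }
        // Balanced means every AZ reports the same capacity value.
        return clientCapacityByAz.values().stream().distinct().count() == 1;
    }
}
```

A result of false does not mean the setup fails to work; per the constraints above, it only indicates that some cross-AZ traffic is likely to remain.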

Operating Steps

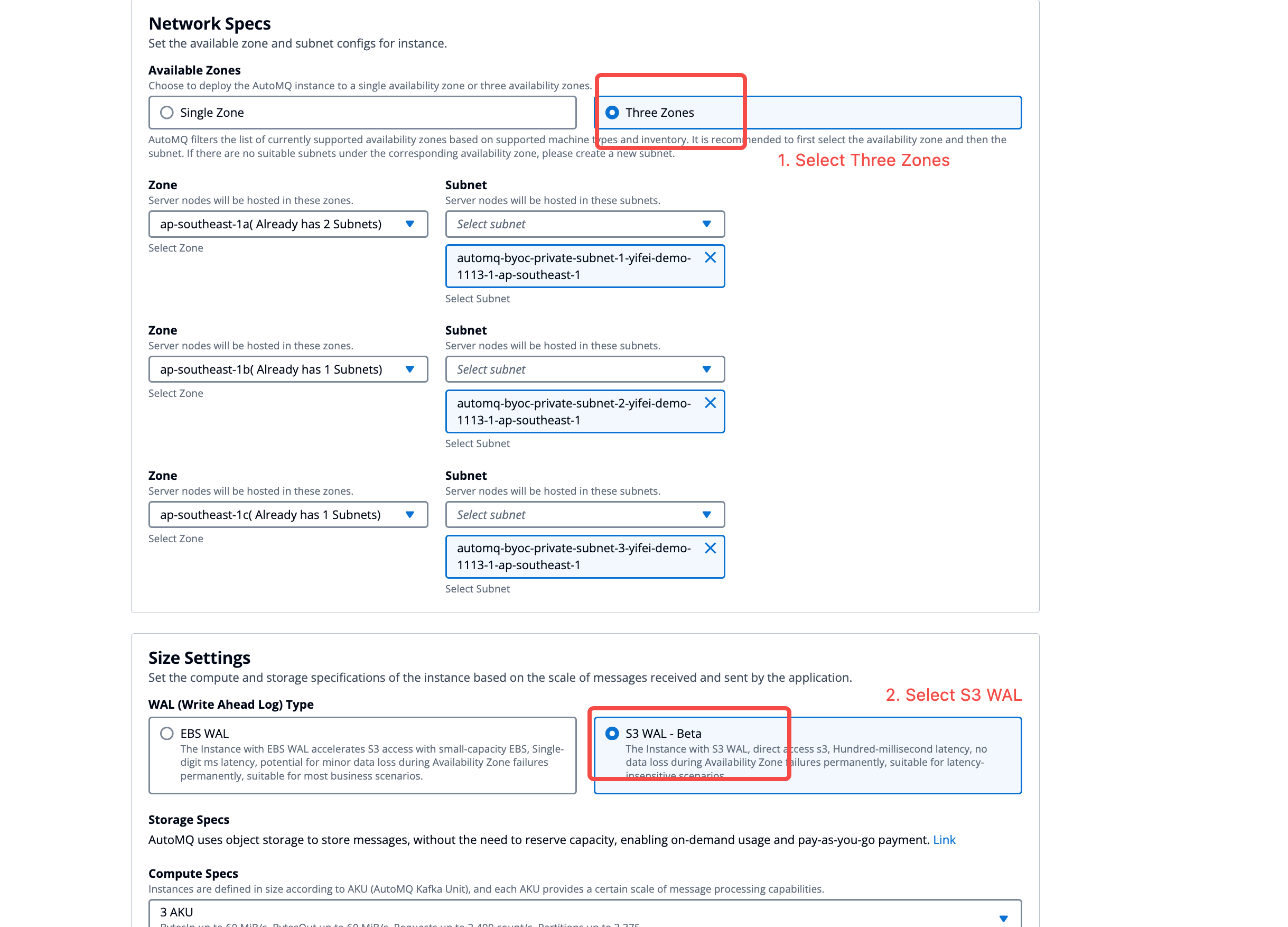

AutoMQ Instance Configuration

To minimize cross-AZ traffic using AutoMQ, first create a compliant AutoMQ instance (the WAL type must be S3WAL). The specific steps are as follows:

Log in to the AutoMQ BYOC environment.

Create an instance, and in the network configuration, select three availability zones. In the storage specification, choose S3WAL for the WAL type.

Note:

When creating an AutoMQ instance, the selected AZs must match the AZs in which the Kafka producers and consumers are deployed, and capacity must be balanced across all AZs. If the capacity is unbalanced, a certain proportion of cross-AZ transmission traffic may remain.

Example: If Kafka producers and consumers are deployed in AZs A, B, and C, and the application capacity in these three AZs is the same, then choose AZs A, B, and C when creating the AutoMQ instance as well.

Client Configuration

After the AutoMQ instance is created, modify the producer and consumer configurations according to the application's change process to save cross-AZ transmission traffic for producers and consumers.

Producer Configuration

AutoMQ supports multi-point writes to partitions across AZs, ensuring that producers only send messages to AutoMQ Brokers in the same AZ. This eliminates cross-AZ traffic for producers. To enable the AutoMQ server to recognize the AZ of the producer, add the following settings in the producer's initialization configuration.

The Kafka Producer needs to add the following configuration item: client.id: automq_type=producer&automq_az=<client_az>

automq_type=producer indicates that this client is a producer.

automq_az=<client_az> identifies the AZ in which the client is located.

A complete example of setting a producer client.id with AZ information:

```java
// ...
props.put(ProducerConfig.CLIENT_ID_CONFIG, String.format("automq_type=producer&automq_az=%s", getCurrentAz()));
// ...
producer = new KafkaProducer<>(props);
```
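The example above calls getCurrentAz() without defining it. The following is a minimal sketch of one way to supply it, under stated assumptions: the AZ is read from an environment variable named CLIENT_AZ (a hypothetical name, to be set by your deployment tooling), and the class and method names are illustrative only. It uses the literal key "client.id", which is the value of ProducerConfig.CLIENT_ID_CONFIG, so the sketch compiles without the Kafka client library.

```java
import java.util.Map;
import java.util.Properties;

/** Hypothetical helper that builds the AZ-tagged producer client ID. */
final class AutomqProducerClientId {

    /** Reads the AZ from an environment map, for example System.getenv(). */
    static String currentAz(Map<String, String> env) {
        String az = env.get("CLIENT_AZ"); // assumed variable name, not defined by AutoMQ
        if (az == null || az.trim().isEmpty()) {
            throw new IllegalStateException("CLIENT_AZ is not set");
        }
        return az.trim();
    }

    /** Builds the client ID in the automq_type=producer&automq_az=<client_az> format. */
    static String build(String az) {
        // Reject characters that would break the key=value&key=value format.
        if (az.contains("&") || az.contains("=")) {
            throw new IllegalArgumentException("AZ must not contain '&' or '=': " + az);
        }
        return String.format("automq_type=producer&automq_az=%s", az);
    }

    /** Returns producer properties with only the client ID set. */
    static Properties producerProps(Map<String, String> env) {
        Properties props = new Properties();
        props.put("client.id", build(currentAz(env)));
        return props;
    }
}
```

In an application, the returned properties would be extended with the usual bootstrap and serializer settings before being passed to KafkaProducer. Failing fast when the AZ is missing is a design choice of this sketch, since a producer without AZ information cannot be recognized by the server as described above.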

Consumer Configuration

AutoMQ supports KIP-881: Rack-aware Partition Assignment for Kafka Consumers, whereby consumers (client version >= 3.5.0) use the Rack-aware partition assignment strategy to consume partitions within the same AZ, eliminating cross-AZ consumer traffic.

Below is an example of configuring a Rack Aware consumer:

```java
// ...
props.put(ConsumerConfig.CLIENT_RACK_CONFIG, getCurrentAz());
// ...
consumer = new KafkaConsumer<>(props);
```

Note:

To use the AZ-aware consumer configuration above, a Kafka client version of 3.5.0 or later is recommended.
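To illustrate the idea behind rack-aware assignment, the following is a toy sketch only. It is not Kafka's assignor and not AutoMQ's implementation: it assumes each partition is tagged with a single rack (AZ), and it prefers consumers whose client.rack matches, falling back to any consumer when no match exists. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy model of rack-aware partition assignment (illustration only). */
final class ToyRackAwareAssignor {

    /**
     * @param partitionRack partition id -> rack (AZ) the partition is served from (simplifying assumption)
     * @param consumerRack  consumer id -> value of its client.rack setting
     * @return consumer id -> assigned partition ids
     */
    static Map<String, List<Integer>> assign(Map<Integer, String> partitionRack,
                                             Map<String, String> consumerRack) {
        // Sort consumers so the result is deterministic.
        List<String> consumers = new ArrayList<>(new TreeMap<>(consumerRack).keySet());
        Map<String, List<Integer>> assignment = new TreeMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        if (consumers.isEmpty()) {
            return assignment;
        }

        // Group consumers by rack for same-AZ lookups.
        Map<String, List<String>> consumersByRack = new HashMap<>();
        for (String consumer : consumers) {
            consumersByRack
                    .computeIfAbsent(consumerRack.get(consumer), rack -> new ArrayList<>())
                    .add(consumer);
        }

        Map<String, Integer> usedPerRack = new HashMap<>();
        int nextFallback = 0;
        for (Map.Entry<Integer, String> entry : new TreeMap<>(partitionRack).entrySet()) {
            String rack = entry.getValue();
            List<String> local = consumersByRack.get(rack);
            String chosen;
            if (local != null) {
                // Round-robin among consumers in the same rack.
                int index = usedPerRack.merge(rack, 1, Integer::sum) - 1;
                chosen = local.get(index % local.size());
            } else {
                // No consumer in this rack: fall back to any consumer (cross-AZ read).
                chosen = consumers.get(nextFallback++ % consumers.size());
            }
            assignment.get(chosen).add(entry.getKey());
        }
        return assignment;
    }
}
```

The fallback branch corresponds to the unbalanced or mismatched deployments described in the prerequisites: when no consumer runs in a partition's AZ, that partition is still consumed, but across AZs.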